Lab 2 C&W Attack

In my previous post we explored FGSM, a simple yet powerful method for generating adversarial examples for LLMs. What happens if we need something more subtle and sophisticated?

Enter the Carlini and Wagner (C&W) attack, a method that iteratively optimises a perturbation to the input. Rather than simply maximising the model's loss, it minimises a combined objective that rewards misclassification and penalises distortion, producing adversarial examples with minimal changes.

How does the C&W attack work?

At its core, the C&W attack balances two objectives:

- Fool the model — ensuring perturbed inputs are misclassified.

- Minimize distortion — keeping the changes small enough to be almost imperceptible to humans.

The attack can be formulated as the following optimisation problem:

$$\min_{\delta} \; \|\delta\|_p + c \cdot f(x + \delta)$$

Where:

- $\delta$ is the perturbation we're trying to find, added to the original input $x$

- $\|\delta\|_p$ is the p-norm of the perturbation (typically p = 0, 2, or ∞), a way of measuring how much the input changes.

- $c$ is a constant that balances fooling the model against minimising the distortion.

- $f$ is an objective function that encourages misclassification
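To make the formulation concrete, here is a minimal sketch of the objective in plain Python: the p-norm of the perturbation plus a constant times a misclassification term. It assumes a targeted attack and uses a margin-style misclassification term (the target logit must beat every other logit), which is one of the forms proposed in the original C&W paper. The helper names (`p_norm`, `margin_loss`, `cw_objective`) and the `kappa` confidence parameter are my own, for illustration only, and are not taken from the lab code.

```python
import math

def p_norm(delta, p):
    """Size of the perturbation under the L0, L2 or L-infinity norm (or any p >= 1)."""
    if p == 0:
        return sum(1 for d in delta if d != 0)       # number of changed features
    if p == math.inf:
        return max(abs(d) for d in delta)            # largest single change
    return sum(abs(d) ** p for d in delta) ** (1.0 / p)

def margin_loss(logits, target, kappa=0.0):
    """Misclassification term: positive until the target logit beats all others by kappa."""
    best_other = max(z for i, z in enumerate(logits) if i != target)
    return max(best_other - logits[target], -kappa)

def cw_objective(delta, logits_adv, target, c, p=2):
    """Distortion plus c times the misclassification term, evaluated on the perturbed input."""
    return p_norm(delta, p) + c * margin_loss(logits_adv, target)

# Illustrative values only: a 3-feature perturbation and the logits it produces.
delta = [0.3, 0.0, -0.4]
logits_adv = [2.0, 1.0, 0.5]   # class 0 still wins, so the attack has not succeeded yet
print(p_norm(delta, 0), p_norm(delta, 2), p_norm(delta, math.inf))
print(cw_objective(delta, logits_adv, target=1, c=2.0))
```

A larger `c` pushes the optimiser to prioritise fooling the model, while a smaller `c` keeps the perturbation tighter at the risk of the attack failing.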

Unlike the FGSM attack, which takes a single gradient step, the C&W attack is an iterative process that refines the perturbation at each step until it converges on a small perturbation that still fools the model.
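The iterative refinement can be sketched on a toy problem. The example below is a simplified illustration, not the lab's implementation: it assumes a tiny linear classifier so the gradient can be written by hand, uses the squared L2 norm for a smooth gradient, and runs plain gradient descent. A full C&W attack would typically use the Adam optimiser, search over `c`, and keep pixel values in range, all of which are omitted here. The function names and parameter values are hypothetical.

```python
import math

def logits(W, b, x):
    """Logits of a linear classifier: one row of W per class."""
    return [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]

def cw_attack_linear(W, b, x, target, c=2.0, kappa=0.1, lr=0.01, steps=200):
    """Gradient descent on ||delta||^2 + c * max(best_other - target_logit, -kappa)."""
    delta = [0.0] * len(x)
    for _ in range(steps):
        z = logits(W, b, [xi + di for xi, di in zip(x, delta)])
        other = max((k for k in range(len(z)) if k != target), key=lambda k: z[k])
        grad = [2.0 * d for d in delta]               # gradient of the distortion term
        if z[other] - z[target] > -kappa:             # margin term is still active
            grad = [g + c * (W[other][i] - W[target][i]) for i, g in enumerate(grad)]
        delta = [d - lr * g for d, g in zip(delta, grad)]
    return delta

# Toy setup (assumed values): identity weights, input initially classified as class 0.
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
b = [0.0, 0.0, 0.0]
x = [1.0, 0.2, 0.1]

delta = cw_attack_linear(W, b, x, target=1)
z_adv = logits(W, b, [xi + di for xi, di in zip(x, delta)])
predicted = z_adv.index(max(z_adv))
l2 = math.sqrt(sum(d * d for d in delta))
print("predicted class:", predicted, "L2 distortion:", round(l2, 3))
```

Once the target class wins by the margin `kappa`, only the distortion term remains, which pulls the perturbation back towards zero. The perturbation therefore settles just past the decision boundary rather than growing without limit.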

Why is it better than FGSM?

There are a few reasons the C&W attack is considered more effective than FGSM:

- Stealth: It creates more realistic adversarial examples with less noise.

- Adaptive: It can bypass a variety of defences that stop simpler attacks like FGSM.

- Generalisable: The method can be applied to a variety of inputs and is not specific to image classification. It can be used against language models by subtly manipulating token embeddings.
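The stealth point can be illustrated with a small worked example. This is not the C&W attack itself: for a linear binary classifier the smallest L2 perturbation has a closed form, which stands in here for what an optimisation-based attack aims for. It is compared against the smallest FGSM-style sign step that flips the same prediction. The weights, input and function names are assumptions chosen for illustration.

```python
import math

def score(w, b, x):
    """Linear score; the predicted class is positive when the score is above zero."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def sign(v):
    return (v > 0) - (v < 0)

def fgsm_flip(w, b, x, overshoot=1.01):
    """Smallest uniform sign step (FGSM-style) that pushes the score across zero."""
    m = score(w, b, x)
    eps = overshoot * abs(m) / sum(abs(wi) for wi in w)
    direction = -1 if m > 0 else 1
    return [direction * eps * sign(wi) for wi in w]

def min_l2_flip(w, b, x, overshoot=1.01):
    """Smallest L2 perturbation that crosses the boundary: a step along w."""
    m = score(w, b, x)
    scale = overshoot * m / sum(wi * wi for wi in w)
    return [-scale * wi for wi in w]

def l2(delta):
    return math.sqrt(sum(d * d for d in delta))

# Assumed toy model: one feature matters far more than the others.
w, b = [3.0, 0.1, 0.1, 0.1], 0.0
x = [1.0, 0.0, 0.0, 0.0]

d_fgsm = fgsm_flip(w, b, x)
d_min = min_l2_flip(w, b, x)
print("FGSM-style L2 distortion:", round(l2(d_fgsm), 3))
print("Minimal L2 distortion:   ", round(l2(d_min), 3))
```

The sign step changes every feature by the same amount, including features the model barely uses, so its total distortion is larger. The optimised perturbation concentrates the change where it has the most effect.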

The lab!

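View the Lab Repository

Like an iterative version of FGSM, the C&W attack repeatedly updates the perturbation using gradients until the model misclassifies the input. The difference is that, rather than taking fixed-size sign steps, it follows the gradient of an objective that trades off distortion against misclassification.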

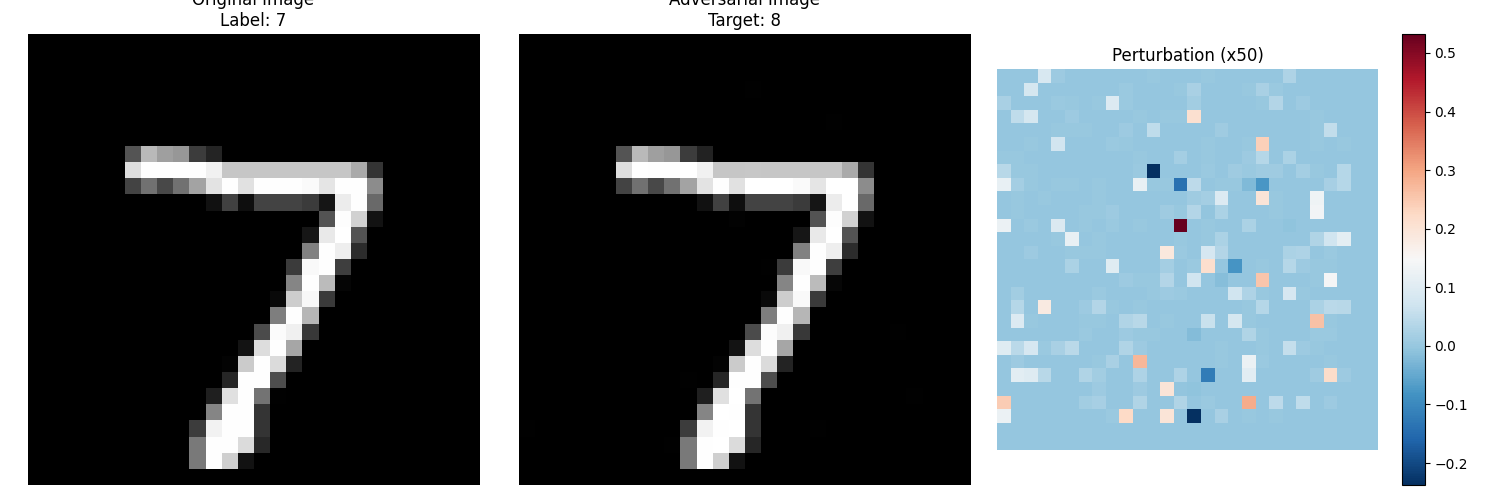

In this image, you can see:

- The original input, which the model correctly classifies as a "7".

- The image sign: the perturbation added to the image to create the adversarial example. It often looks like a "negative" or an abstract pattern, almost like a heatmap highlighting the areas the model relies on to make its decision.

- The resulting adversarial example, which fools the model into classifying the image as an "8".

Unlike the FGSM example, where you could see the difference between the original and adversarial images, here the difference is much more subtle. The perturbation panel shows the changes magnified so that they are visible.

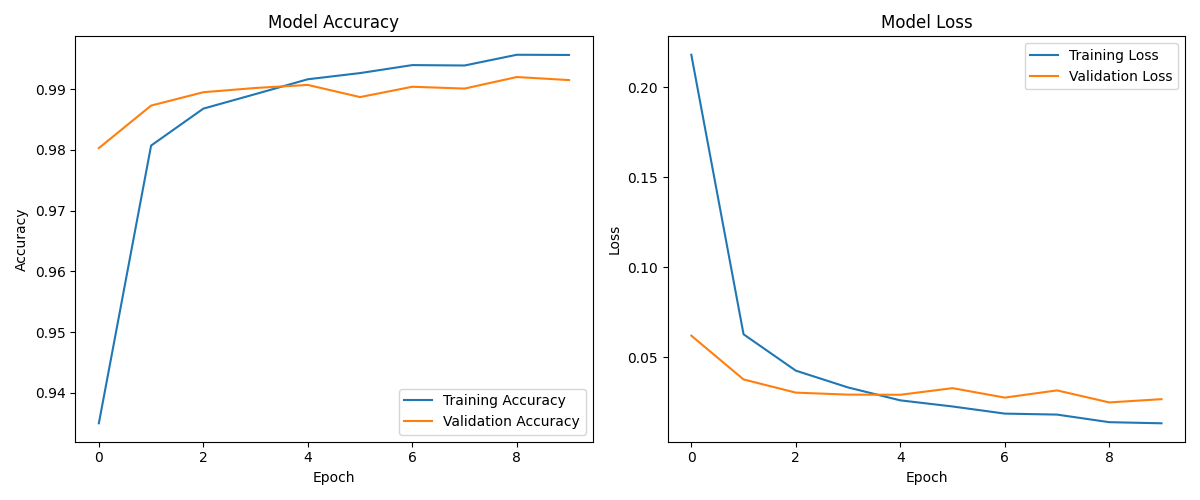

We have plotted model accuracy and loss over the epochs to show how the attack progresses.

```
python main.py
Test accuracy: 0.9922
Starting C&W attack - Target label: 7
Epoch 1000 - Loss: 0.000000 - Accuracy: 0.9922
Epoch 2000 - Loss: 0.000000 - Accuracy: 0.9922
Epoch 3000 - Loss: 0.000000 - Accuracy: 0.9922
Epoch 4000 - Loss: 0.000000 - Accuracy: 0.9922
Epoch 5000 - Loss: 0.000000 - Accuracy: 0.9922
Epoch 6000 - Loss: 0.000000 - Accuracy: 0.9922
Epoch 7000 - Loss: 0.000000 - Accuracy: 0.9922
Epoch 8000 - Loss: 0.000000 - Accuracy: 0.9922
Epoch 9000 - Loss: 0.000000 - Accuracy: 0.9922
Epoch 10000 - Loss: 0.000000 - Accuracy: 0.9922
Adversarial identification: 8
```

How do we defend against it?

The defences are similar to those for the FGSM attack:

- Adversarial Training: Train the model on adversarial examples.

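- Defensive Distillation: Train a student model on the soft probabilities produced by a teacher model. Note that the C&W attack was originally designed to defeat this defence, so it offers little protection on its own.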

- Gradient Masking: Mask the gradients to make it more difficult for the attacker to generate adversarial examples.

- Input Preprocessing: Preprocess the input to make it more robust to adversarial examples.

When training models, you should use the `adversarial_training` method to train on both clean and adversarial inputs.
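As a rough idea of what training on both clean and adversarial inputs looks like, here is a minimal sketch using a tiny logistic regression in plain Python. It is not the lab's `adversarial_training` method: the function names, data and hyperparameters are made up for illustration, and the adversarial examples are generated with a single FGSM step because it is cheap (a stronger attack such as C&W could be swapped in at a higher cost).

```python
import math

def predict_proba(w, b, x):
    """Probability of class 1 under a logistic regression model."""
    return 1.0 / (1.0 + math.exp(-(sum(wi * xi for wi, xi in zip(w, x)) + b)))

def fgsm(w, b, x, y, eps):
    """One FGSM step: the gradient of the logistic loss w.r.t. x is (p - y) * w."""
    p = predict_proba(w, b, x)
    grads = [(p - y) * wi for wi in w]
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grads)]

def train(data, eps=0.5, lr=0.1, epochs=200, adversarial=True):
    """Full-batch gradient descent on clean data plus freshly generated adversarial copies."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        batch = list(data)
        if adversarial:
            batch += [(fgsm(w, b, x, y, eps), y) for x, y in data]
        grad_w, grad_b = [0.0] * len(w), 0.0
        for x, y in batch:
            err = predict_proba(w, b, x) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / len(batch) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(batch)
    return w, b

def accuracy(w, b, data):
    return sum((predict_proba(w, b, x) > 0.5) == bool(y) for x, y in data) / len(data)

# Assumed toy dataset: two well-separated, mirrored clusters.
negatives = [[-2.0, -2.0], [-3.0, -1.0], [-1.0, -3.0], [-2.5, -2.5]]
data = [(x, 0) for x in negatives] + [([-v for v in x], 1) for x in negatives]

w, b = train(data)
attacked = [(fgsm(w, b, x, y, 0.5), y) for x, y in data]
print("clean accuracy:", accuracy(w, b, data))
print("accuracy under FGSM (eps=0.5):", accuracy(w, b, attacked))
```

The key design choice is regenerating the adversarial examples every epoch against the current weights, so the model keeps seeing attacks tailored to its latest decision boundary.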

If you're running a TensorFlow or PyTorch model, you could:

- Augment training with adversarial examples (Adversarial Training).

- Apply feature squeezing (reducing bit-depth).

- Add randomized smoothing (introducing controlled noise).

This would help make the model more robust to adversarial examples.
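The last two ideas are simple enough to sketch without any framework. The snippet below is an illustrative sketch, assuming inputs are lists of pixel values in the range 0 to 1 and that `classify` is any function returning a label. The function names, the noise level `sigma` and the sample count are my own choices, not part of the lab.

```python
import random
from collections import Counter

def squeeze_bit_depth(pixels, bits):
    """Feature squeezing: quantise values in [0, 1] to 2**bits levels."""
    levels = 2 ** bits - 1
    return [round(p * levels) / levels for p in pixels]

def smoothed_predict(classify, x, sigma=0.25, n_samples=100, seed=0):
    """Randomized smoothing: majority vote over Gaussian-noised copies of the input."""
    rng = random.Random(seed)
    votes = Counter(
        classify([xi + rng.gauss(0.0, sigma) for xi in x]) for _ in range(n_samples)
    )
    return votes.most_common(1)[0][0]

# A small perturbation disappears once both inputs are squeezed to 1 bit.
clean = [0.2, 0.8]
adversarial = [0.23, 0.77]
print(squeeze_bit_depth(clean, 1), squeeze_bit_depth(adversarial, 1))

# Toy classifier (assumed): label 1 when the features sum to more than zero.
toy_classify = lambda features: int(sum(features) > 0)
print(smoothed_predict(toy_classify, [1.0, 1.0]))
```

Squeezing removes the fine-grained detail that small perturbations rely on, while smoothing makes the prediction depend on a neighbourhood of the input rather than a single point. Both trade some clean accuracy for robustness, so the bit depth and noise level need tuning per model.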